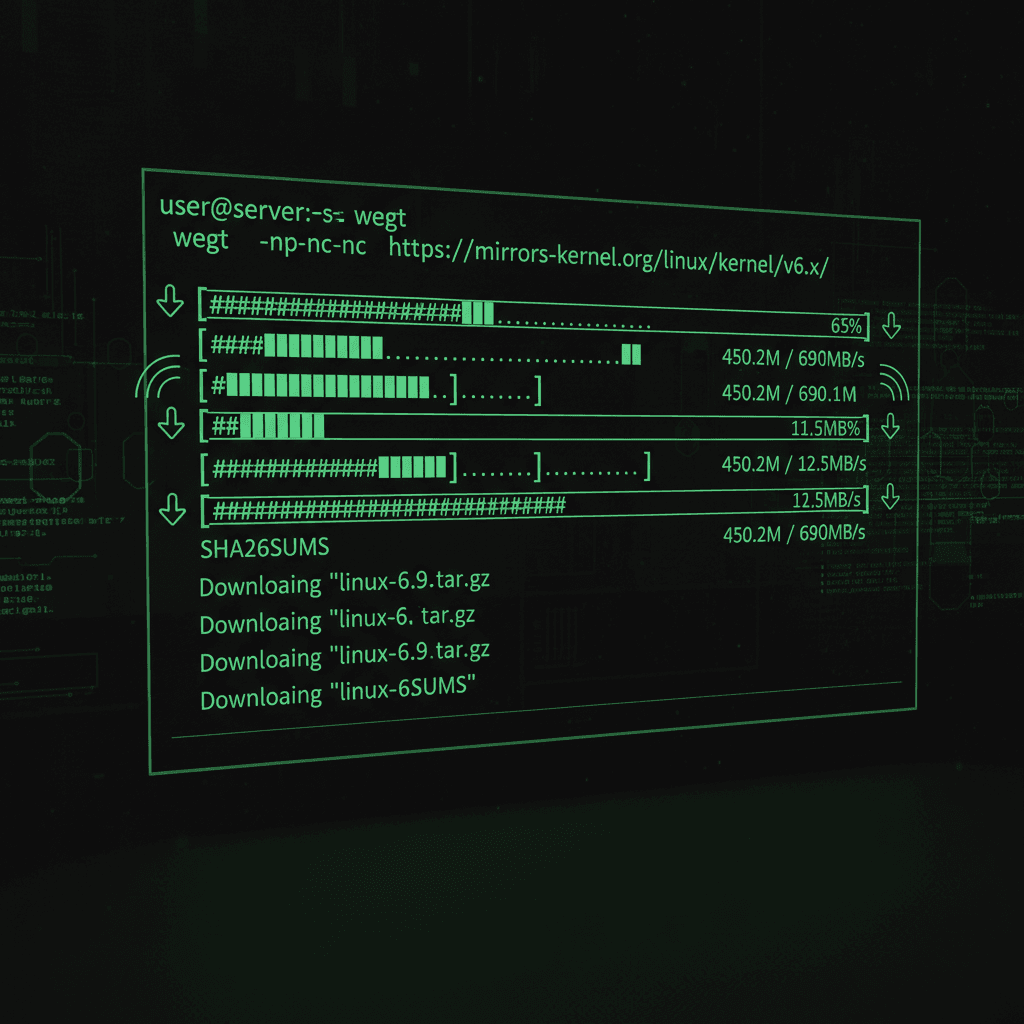

If you’ve ever needed to grab a file from a remote server without a graphical interface, you’ve probably met wget. This workhorse of a command has saved my bacon more times than I can count. Whether I’m pulling down ISO files, mirroring documentation for offline access, or scripting automated backups, the wget command in Linux remains my go-to tool.

Let me walk you through everything you need to know about wget. We’ll cover the basics, dig into advanced features, and I’ll share some real scenarios where this tool shines brightest.

What is the wget Command in Linux?

The wget command is a non-interactive network downloader. It retrieves files from web servers using HTTP, HTTPS, and FTP protocols. The “non-interactive” part matters. Unlike browser downloads, wget doesn’t need you clicking buttons or watching progress bars.

This makes wget perfect for:

- Scripts and automation: Downloads happen without user intervention

- Server environments: No GUI required

- Unreliable connections: wget handles interruptions gracefully

- Background operations: Start a download and walk away

I remember setting up a new server years back. No desktop environment, just a blinking cursor. Needed to grab some packages from a vendor site. wget made that possible without installing anything extra. It’s usually already there on most Linux distributions.


How wget Differs from Browser Downloads

Your browser is interactive. It waits for clicks, shows pop-ups, and expects you to babysit the process. wget operates differently. Point it at a URL, press enter, and it handles everything.

The real advantage? wget survives network drops. If your connection hiccups mid-download, wget can pick up where it left off. Try doing that with a browser download of a 4GB ISO file.

wget vs curl: When to Use Which

This question comes up constantly. Both tools download files. Both work from the command line. But they’re built for different purposes.

- wget: Download-focused. Best for grabbing files and mirroring sites.

- curl: Data transfer-focused. Best for API interactions and complex HTTP requests.

wget has recursive downloading built in. It can follow links and grab entire directory structures. curl doesn’t do this natively. On the flip side, curl supports more protocols and gives you finer control over HTTP requests, which is why it suits API interactions.

For a deeper technical breakdown, the curl maintainer’s comparison offers an authoritative perspective from someone who knows both tools inside out.

My rule: downloading files? wget. Talking to APIs? curl.

Installing wget on Linux

Good news. Most Linux distributions ship with wget pre-installed. But if yours doesn’t, installation takes seconds.

Ubuntu/Debian

sudo apt update

sudo apt install wget

RHEL/CentOS/Fedora

sudo dnf install wget

On older RHEL systems, use yum instead of dnf.

Verify Installation

wget --version

You should see version information and compile options. For the complete technical reference, check the wget man page.
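In a script, I'd rather check for wget up front than hit "command not found" halfway through. Here is a minimal sketch of that check. The function names `have_cmd` and `check_wget` are my own, not part of wget.

```shell
# Return success if the named command is available on PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# Print a hint early instead of failing later with "command not found".
check_wget() {
    if have_cmd wget; then
        echo "wget found"
    else
        echo "wget missing: install it with your package manager" >&2
        return 1
    fi
}
```

Calling `check_wget || exit 1` near the top of a script stops it before any download is attempted.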

Basic wget Command Syntax and Usage

The basic structure is straightforward:

wget [options] [URL]

At its simplest, just give wget a URL:

wget https://example.com/file.tar.gz

This downloads the file to your current directory with its original filename.

Specifying Output Filename

Want a different filename? Use -O:

wget -O custom-name.tar.gz https://example.com/file.tar.gz

Capital O, not zero. I’ve made that typo more times than I’d like to admit.

Essential wget Options You’ll Actually Use

The official GNU wget documentation lists dozens of options. Most you’ll never touch. Here are the ones that actually matter.

Resume Interrupted Downloads with -c

This is critical for large files. If your download stops for any reason:

wget -c https://example.com/huge-file.iso

The -c flag tells wget to continue from where it left off. Without this, you’d start over from scratch.
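wget already retries on its own, but it can still give up on a flaky link. One way to handle that is a small wrapper that re-runs `wget -c` until it succeeds. This is a sketch, not a standard recipe: `fetch_resumable`, the default of 5 attempts, and the 10-second pause are all my assumptions.

```shell
# fetch_resumable URL [MAX_ATTEMPTS] [PAUSE_SECONDS]
# Re-run "wget -c" until it succeeds or the attempt budget is spent.
fetch_resumable() {
    url=$1
    max_attempts=${2:-5}
    pause=${3:-10}
    attempt=1
    while [ "$attempt" -le "$max_attempts" ]; do
        # -c resumes a partial file instead of starting over.
        if wget -c "$url"; then
            return 0
        fi
        echo "attempt $attempt of $max_attempts failed" >&2
        attempt=$((attempt + 1))
        # Pause between attempts, but not after the final one.
        if [ "$attempt" -le "$max_attempts" ]; then
            sleep "$pause"
        fi
    done
    return 1
}
```

Usage would look like `fetch_resumable https://example.com/huge-file.iso 10 30`. Because every attempt uses -c, the bytes already on disk are kept between attempts.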

Download in Background with -b

Starting a big download before leaving for the day? Run it in the background:

wget -b https://example.com/large-file.zip

wget creates a file called wget-log to track progress. Essential for long downloads over SSH sessions.

Limit Download Speed with --limit-rate

On a shared server? Be a good neighbor:

wget --limit-rate=500k https://example.com/file.zip

This caps the download at 500 kilobytes per second. Adjust based on your available bandwidth.
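Before picking a cap, it helps to know how long the download will take at that rate. Here is a rough back-of-the-envelope sketch. `estimate_seconds` is a hypothetical helper, and it assumes the k suffix means 1024 bytes and that the download runs at the cap the whole time.

```shell
# estimate_seconds SIZE_BYTES RATE_KIB_PER_SEC
# Rounds up, and assumes the "k" suffix means 1024 bytes.
estimate_seconds() {
    size_bytes=$1
    rate_bytes=$(( $2 * 1024 ))
    echo $(( (size_bytes + rate_bytes - 1) / rate_bytes ))
}

# A 700 MiB image at --limit-rate=500k:
estimate_seconds $((700 * 1024 * 1024)) 500    # prints 1434
```

That is roughly 24 minutes, which tells you whether 500k is a polite cap or a painfully slow one for the file at hand.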

Save to Specific Directory with -P

wget -P /home/user/downloads https://example.com/file.zip

Before downloading large files, consider checking available disk space first.
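The disk space check can be scripted too. This is a small sketch built on `df`; `free_kib` and `has_room` are names I made up, and the parsing assumes the mount point contains no spaces.

```shell
# free_kib DIR: print the free space, in KiB, on the filesystem holding DIR.
free_kib() {
    # -P forces the portable one-line-per-filesystem layout; -k reports KiB.
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# has_room DIR NEEDED_KIB: succeed only if DIR has at least NEEDED_KIB free.
has_room() {
    [ "$(free_kib "$1")" -ge "$2" ]
}
```

For a 4 GiB file, that becomes `has_room /home/user/downloads 4194304 && wget -P /home/user/downloads https://example.com/file.zip`, so the download only starts when the space is there.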

Quiet Mode with -q

For scripts where you don’t need progress output:

wget -q https://example.com/file.zip

Useful when wget runs inside automated workflows.

Downloading Multiple Files with wget

Got a list of files to download? Don’t run wget manually for each one.

Using -i to Read URLs from File

Create a text file with one URL per line:

https://example.com/file1.zip

https://example.com/file2.zip

https://example.com/file3.zip

Then point wget at it:

wget -i url-list.txt

wget processes each URL automatically. Combine with -c and -P for robust batch downloads.
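When the filenames follow a pattern, I generate the list instead of typing it. Here is a sketch of both halves: building the file and feeding it to wget with -c and -P. The helper names `make_url_list` and `fetch_list` are illustrative only.

```shell
# make_url_list BASE_URL OUT_FILE NAME...
# Write one URL per line, the format "wget -i" expects.
make_url_list() {
    base=$1
    out=$2
    shift 2
    : > "$out"                      # start with an empty file
    for name in "$@"; do
        printf '%s/%s\n' "${base%/}" "$name" >> "$out"
    done
}

# fetch_list LIST_FILE DEST_DIR
# -c resumes partial files, -P sets the target directory, -i reads the list.
fetch_list() {
    wget -c -P "$2" -i "$1"
}
```

For example, `make_url_list https://example.com url-list.txt file1.zip file2.zip file3.zip` followed by `fetch_list url-list.txt /home/user/downloads`. If the batch is interrupted, running `fetch_list` again picks up the partial files rather than restarting them.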

Recursive Downloads and Website Mirroring

This is where wget really separates itself from other tools. Recursive downloading follows links and grabs entire directory structures.

Basic Recursive Download

wget -r https://example.com/docs/

The -r flag enables recursion. wget follows links and downloads what it finds.

Controlling Recursion Depth

Recursive downloads can spiral out of control: by default, wget follows links up to five levels deep. Use -l to set a smaller maximum depth:

wget -r -l 2 https://example.com/docs/

This goes two levels deep and stops. Think of it like counting clicks from your starting page.
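In practice I rarely use -r and -l alone. Here is a sketch of a bounded recursive fetch that also stays inside the starting directory and pauses between requests. `fetch_tree` is a made-up wrapper, and the one-second wait is an arbitrary courtesy value.

```shell
# fetch_tree URL DEPTH DEST_DIR
fetch_tree() {
    # -r        follow links recursively
    # -l N      stop N levels below the starting page
    # -np       never ascend to the parent directory
    # --wait=1  pause one second between requests to go easy on the server
    wget -r -l "$2" -np --wait=1 -P "$3" "$1"
}
```

`fetch_tree https://example.com/docs/ 2 /home/user/mirror` grabs the docs tree two levels down and nothing above /docs/.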

Mirroring Websites

The -m flag is shorthand for mirroring with sensible defaults:

wget -m https://example.com/docs/

This enables recursion, timestamping, and infinite depth. Add --convert-links to make the downloaded site browsable offline:

wget -m --convert-links https://example.com/docs/

Add -np (no parent) to prevent wget from climbing into parent directories.

Authentication and Secure Downloads

Not all files sit on public servers. wget handles authentication for protected resources.

HTTP Basic Authentication

wget --http-user=username --http-password=password https://example.com/protected/file.zip

For security, avoid putting passwords directly in commands. They show up in shell history. Consider using a .wgetrc configuration file instead.
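Here is one way the config-file approach might look. The sketch reads the password from standard input, so it never appears in a command line, and writes it to a file only the owner can read. `write_wgetrc` and the `~/.wgetrc-example` path are hypothetical. The function overwrites its target, so point it at a dedicated file rather than an existing .wgetrc, and note that passwords with unusual characters may need different handling.

```shell
# write_wgetrc FILE USER
# Read a password from standard input and store it in a private config file.
write_wgetrc() {
    IFS= read -r password || return 1
    (
        umask 077                   # a new file is readable by the owner only
        printf 'http_user = %s\nhttp_password = %s\n' "$2" "$password" > "$1"
    )
    chmod 600 "$1"                  # also tighten a file that already existed
}
```

Interactively, `stty -echo; write_wgetrc "$HOME/.wgetrc-example" username; stty echo` lets you type the password without it showing on screen. Then `WGETRC="$HOME/.wgetrc-example" wget https://example.com/protected/file.zip` tells wget to load that file.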

SSL Certificate Handling

Enterprise environments sometimes trigger SSL errors. The --no-check-certificate flag bypasses verification:

wget --no-check-certificate https://internal-server/file.zip

Use this cautiously. It disables important security checks. For secure remote connections, SSH-based tools often work better than HTTP with disabled verification.
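If the errors come from an internal certificate authority, a safer route is usually to hand wget that CA's certificate rather than switch verification off. Here is a sketch using --ca-certificate. The wrapper name `fetch_with_ca` is my own, and it assumes you have the CA certificate as a PEM file.

```shell
# fetch_with_ca CA_FILE URL
# Verify the server against a specific CA bundle instead of skipping checks.
fetch_with_ca() {
    if [ ! -r "$1" ]; then
        echo "CA file not readable: $1" >&2
        return 1
    fi
    wget --ca-certificate="$1" "$2"
}
```

Usage: `fetch_with_ca /path/to/internal-ca.pem https://internal-server/file.zip`, where the path is a placeholder for wherever your organization's CA file lives.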

Advanced wget Features for Power Users

Finding Broken Links with --spider

Spider mode checks if URLs exist without downloading:

wget --spider https://example.com/file.zip

Useful for link validation scripts. Combine with grep for filtering output when checking multiple URLs.
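A link validation script can be as small as a loop over a URL list. This sketch relies on wget's exit status rather than parsing its output. `check_urls` and the OK/BROKEN labels are my own convention.

```shell
# check_urls LIST_FILE
# Print "OK <url>" or "BROKEN <url>" per line; fail if any URL is broken.
check_urls() {
    rc=0
    while IFS= read -r url; do
        [ -n "$url" ] || continue   # skip blank lines
        # --spider checks the URL without saving it; -q silences output.
        if wget --spider -q "$url" < /dev/null; then
            echo "OK $url"
        else
            echo "BROKEN $url"
            rc=1
        fi
    done < "$1"
    return "$rc"
}
```

`check_urls url-list.txt | grep BROKEN` then lists only the dead links.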

File Filtering

Download only specific file types:

wget -r --accept=pdf https://example.com/docs/

Or exclude certain types:

wget -r --reject=jpg,png https://example.com/docs/

Timestamping with -N

Only download files newer than local copies:

wget -N https://example.com/file.zip

Perfect for keeping mirrors in sync without re-downloading unchanged files.
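Filtering and timestamping work well together. Here is a sketch of a repeatable "sync the PDFs" job. `sync_pdfs` is a hypothetical name and the depth of 2 is arbitrary.

```shell
# sync_pdfs URL DEST_DIR
sync_pdfs() {
    # -r -l 2   recurse two levels
    # -np       stay below the starting directory
    # -N        skip files whose local copy is already up to date
    # -A pdf    keep only files ending in .pdf (short form of --accept)
    wget -r -l 2 -np -N -A pdf -P "$2" "$1"
}
```

Run `sync_pdfs https://example.com/docs/ /home/user/docs` as often as you like. wget still fetches HTML pages to discover links and then discards them, so expect some traffic beyond the PDFs themselves.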

Automating Downloads with wget and Cron

wget pairs beautifully with scheduled tasks. Download reports every night, sync documentation weekly, or grab log files automatically.

Simple wget Script

#!/bin/bash

wget -q -P /home/user/reports/ https://example.com/daily-report.csv && echo "Download complete: $(date)" >> /var/log/report-downloads.log

The && writes the log entry only when wget succeeds, so a failed download is never recorded as complete. Schedule the script with cron:

0 6 * * * /home/user/scripts/download-reports.sh

This runs every morning at 6 AM.

Logging for Monitoring

Use -o to specify a log file:

wget -o /var/log/wget.log https://example.com/file.zip

Use -a to append to existing logs instead of overwriting.

Common wget Errors and Troubleshooting

Things don’t always work smoothly. Here’s how to handle common issues.

Connection Timeouts

For unreliable networks, adjust timeout and retry settings:

wget --timeout=30 --tries=5 https://example.com/file.zip

Add --retry-connrefused for servers that occasionally refuse connections.

403 Forbidden Errors

Some servers block wget’s default user-agent. Try spoofing a browser:

wget --user-agent="Mozilla/5.0" https://example.com/file.zip

404 Not Found

The file doesn’t exist. Double-check the URL. Use --spider to test URLs before building download scripts.
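In scripts, wget's exit status tells you which kind of failure you hit. The codes below are the ones listed in the wget man page. The function `explain_wget_status` and its wording are my own shorthand.

```shell
# explain_wget_status CODE: translate a wget exit status into a short message.
explain_wget_status() {
    case "$1" in
        0) echo "success" ;;
        1) echo "generic error" ;;
        2) echo "command-line or config parse error" ;;
        3) echo "file I/O error" ;;
        4) echo "network failure" ;;
        5) echo "SSL verification failure" ;;
        6) echo "authentication failure" ;;
        7) echo "protocol error" ;;
        8) echo "server issued an error response" ;;
        *) echo "unknown status $1" ;;
    esac
}
```

A typical pattern is `wget -q https://example.com/file.zip; explain_wget_status $?`. Both 403 and 404 responses land on status 8, while timeouts and refused connections show up as status 4.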

When NOT to Use wget

wget excels at downloading files. It’s not the right tool for everything.

- API interactions: curl handles REST APIs better. wget can send POST and other methods (--post-data, --method), but its support is basic next to curl’s.

- Complex HTTP requests: Need custom headers, cookies, or JSON payloads? curl gives you more control.

- Two-way file transfers: wget only pulls. To push as well as pull and keep directories in sync, rsync is purpose-built for the job.

- Upload operations: wget downloads. For uploads, look elsewhere.

Knowing when NOT to use a tool matters as much as knowing how to use it.

Real-World wget Use Cases

After years of using wget, these scenarios come up repeatedly:

- Downloading software releases: Grab ISOs, tarballs, and packages from vendor sites

- Documentation mirrors: Keep offline copies of critical documentation

- Automated data collection: Pull daily reports or data feeds for analytics pipelines

- Site backups: Mirror small websites before migrations

- Batch dataset downloads: Research data often comes as lists of URLs

wget is one of those tools that feels simple until you need it. Then you’re grateful it exists. Master these fundamentals, and you’ll handle most download scenarios that come your way. For testing your wget skills on a fresh server, budget hosting options give you a safe playground to experiment.

Start with basic downloads. Add options as needed. Before long, wget becomes second nature.