I’ve lost count of how many times the sort command has pulled me out of a tight spot. Whether I’m analyzing server logs, cleaning up configuration files, or just trying to make sense of a CSV export, sort is one of those commands that seems simple until you need it to do something specific. Then you realize it’s way more powerful than you thought.

Here’s the thing about sort: everyone knows it exists, but most people only use about 10% of what it can do. I spent my first two years as a sysadmin thinking sort just arranged things alphabetically. Then I watched a senior engineer use it to identify duplicate database entries during a migration, and I realized I’d been missing out on a seriously useful tool.

Let me walk you through how sort actually works, the options you’ll use constantly, and the gotchas that will absolutely trip you up if you don’t know they’re coming.

What is the Sort Command in Linux?

The sort command does exactly what its name suggests: it takes input (usually from a file or pipe) and outputs the lines in a specific order. By default, it sorts alphabetically from A to Z, but that’s just scratching the surface.

The basic syntax looks like this:

sort [OPTIONS] [FILE]

Without any options, sort reads from a file (or standard input), sorts the lines, and prints the result to standard output. Your original file stays untouched, which is a relief when you’re working with production data.

Why Sort Matters More Than You Think

In my daily work, I probably use sort in at least a dozen different contexts. Here are the scenarios where it’s saved my bacon:

- Log analysis: Sorting timestamps to find patterns in error bursts

- Finding duplicates: Combined with uniq to spot repeated entries in user lists or config files

- CSV processing: Organizing spreadsheet exports by specific columns before importing to databases

- Performance monitoring: Sorting process memory usage to find the biggest resource hogs

- Configuration management: Ensuring consistent ordering in files managed by version control

The beauty of sort is that it plays nicely with pipes. You can chain it with grep, awk, and other text processing tools to build powerful one-liners.

Basic Sort Examples You’ll Actually Use

Let’s start with the fundamentals. I’m going to show you the examples I find myself typing over and over.

Simple Alphabetical Sorting

The most basic use case: sorting a file alphabetically.

sort names.txt

This reads names.txt, sorts the lines alphabetically, and prints them to your terminal. If you want to save the sorted output to a new file:

sort names.txt > names_sorted.txt

Or, use the -o option (which is safer because it handles the original file correctly even if you’re sorting it in place):

sort -o names_sorted.txt names.txt

Sorting in Reverse Order

Need to reverse the sort order? Add the -r flag:

sort -r names.txt

This is particularly useful when you’re looking for the highest values in a list, like finding which processes are consuming the most memory.

Removing Duplicates While Sorting

The -u option (unique) removes duplicate lines from your output:

sort -u usernames.txt

I use this constantly when cleaning up lists. It’s faster than piping to uniq, though there are subtle differences between sort -u and sort | uniq that matter in certain situations.
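One of those differences shows up as soon as you add a key. Here is a rough sketch, with sample data and variable names made up for illustration: with -u and -k, sort judges uniqueness by the key alone, while uniq always compares whole lines.

```shell
# Hypothetical sample: two lines share the key "alice" but differ in the count.
data='alice 2
bob 1
alice 1'

# -u combined with a key keeps one line per distinct key (field 1 here).
by_key=$(printf '%s\n' "$data" | LC_ALL=C sort -u -k1,1)

# uniq compares entire lines, so both "alice" lines survive.
whole_line=$(printf '%s\n' "$data" | LC_ALL=C sort -k1,1 | uniq)

printf 'sort -u -k1,1:\n%s\n\n' "$by_key"
printf 'sort -k1,1 | uniq:\n%s\n' "$whole_line"
```

The other practical difference is that uniq can count (-c) or print only the repeated lines (-d), which sort -u has no equivalent for.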

Numeric Sorting: The Gotcha That Gets Everyone

Here’s where sort trips up almost every beginner. Let’s say you have a file with numbers:

100

20

3

45

If you run sort numbers.txt, you’ll get this:

100

20

3

45

Wait, what? That’s not sorted at all! Actually, it is. By default, sort uses lexicographical (dictionary) order, not numeric order. It compares character by character, so “100” comes before “20” because the first character ‘1’ comes before ‘2’.

To fix this, use the -n option for numeric sorting:

sort -n numbers.txt

Now you get the expected result:

3

20

45

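100

I can’t tell you how many times I’ve forgotten this flag when sorting IP addresses or analyzing disk usage. It’s the number one mistake I see junior admins make. One caveat: -n alone is not enough for IP addresses, because it parses only the leading number on each line, so the later octets are not compared numerically.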
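For IP addresses specifically, one common approach is to split on the dots and sort each octet as its own numeric key. This is a minimal sketch with made-up addresses, not the only way to do it:

```shell
# Hypothetical sample addresses, deliberately out of order.
ips='10.0.0.20
10.0.0.3
192.168.1.1
9.255.0.1'

# -t. makes "." the field separator; each -kN,Nn sorts one octet numerically.
sorted_ips=$(printf '%s\n' "$ips" | sort -t. -k1,1n -k2,2n -k3,3n -k4,4n)

printf '%s\n' "$sorted_ips"
# 9.255.0.1
# 10.0.0.3
# 10.0.0.20
# 192.168.1.1
```

Writing each key as N,N matters here: it limits the key to a single octet instead of letting it run to the end of the line.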

Human-Readable Number Sorting

When you’re dealing with output from commands like du -h that show sizes like “1.5G” or “230M”, use the -h option:

du -h /var/log/* | sort -h

This understands suffixes like K, M, G, T and sorts them correctly. Super useful when you’re checking disk usage and want to find the biggest offenders quickly.
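To see why -h is needed rather than -n, here is a small comparison on made-up sizes. It assumes a sort that supports -h (GNU coreutils does), and pins LC_ALL=C so the decimal point is read as ".":

```shell
# Hypothetical size values in the style of du -h output.
sizes='1.5G
230M
12K
2T'

# -n looks only at the number and ignores the unit suffix.
numeric=$(printf '%s\n' "$sizes" | LC_ALL=C sort -n)

# -h takes the suffix into account, so K < M < G < T.
human=$(printf '%s\n' "$sizes" | LC_ALL=C sort -h)

printf 'sort -n:\n%s\n\n' "$numeric"
printf 'sort -h:\n%s\n' "$human"
# sort -h prints: 12K, 230M, 1.5G, 2T
```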

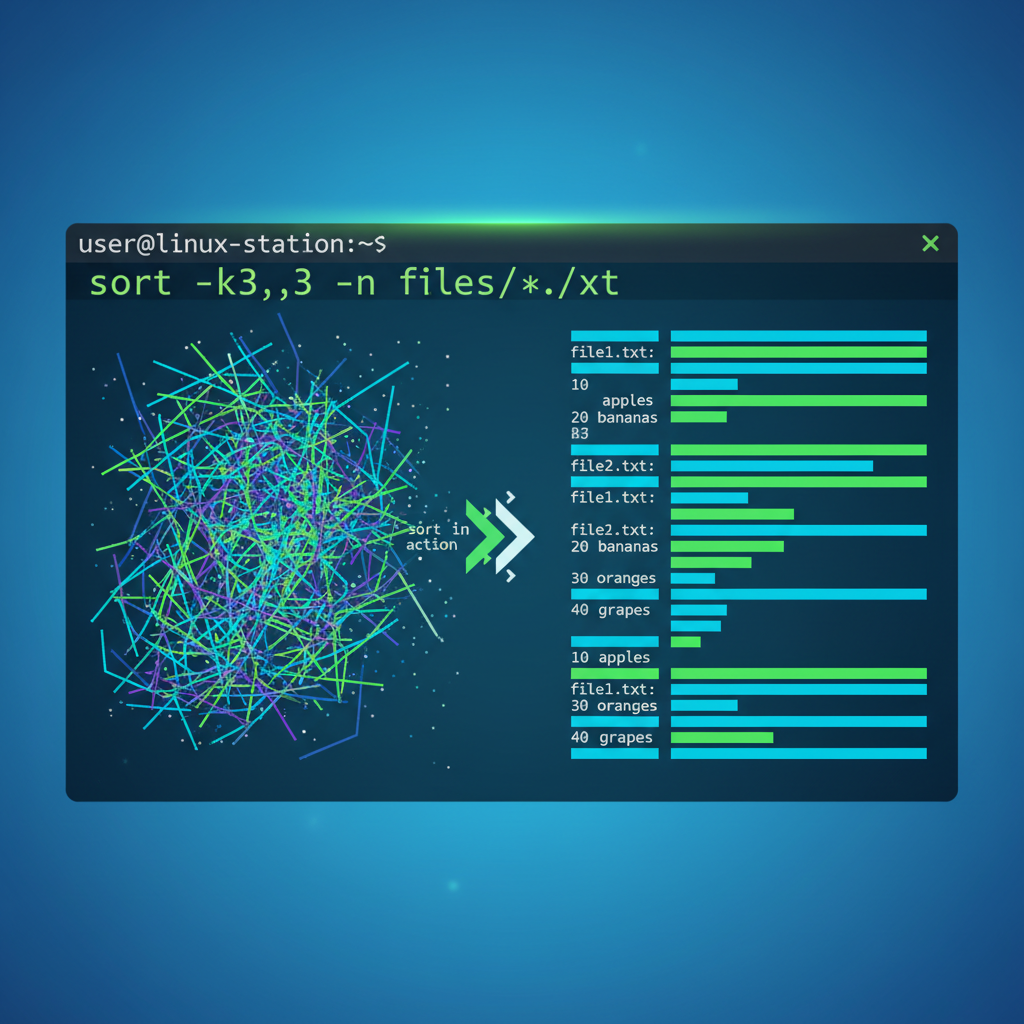

Sorting by Specific Fields (Columns)

This is where sort becomes genuinely powerful. Most of the time, you’re not sorting entire lines. You’re sorting by a specific column in structured data.

The -k option lets you specify which field to sort by. By default, fields are separated by whitespace (spaces or tabs).

Basic Field Sorting

Let’s say you have a file with usernames and login counts:

alice 42

bob 15

charlie 103

david 8

To sort by the second column (login counts) numerically:

sort -k2 -n logins.txt

Result:

david 8

bob 15

alice 42

charlie 103

Want to see who has the most logins? Add the -r flag:

sort -k2 -nr logins.txt

Sorting CSV Files

For CSV files or any custom delimiter, use the -t option to specify the field separator:

sort -t, -k3 -n data.csv

This sorts a CSV file by the third column numerically, using comma as the delimiter. I use this pattern constantly when processing exports from monitoring tools or databases.
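Real exports usually have a header row, which you don't want sorted into the data. A sketch of one way to handle that, using a hypothetical three-column file (the column names and values are invented for the example):

```shell
# Build a small hypothetical CSV with a header row.
csv=$(mktemp)
printf 'host,region,latency_ms\nweb1,eu,120\ndb1,us,9\ncache1,eu,35\n' > "$csv"

# Print the header untouched, then sort only the data rows by column 3.
sorted_csv=$(
    head -n 1 "$csv"
    tail -n +2 "$csv" | sort -t, -k3,3n
)

printf '%s\n' "$sorted_csv"
# host,region,latency_ms
# db1,us,9
# cache1,eu,35
# web1,eu,120

rm -f "$csv"
```

Keep in mind that sort splits on every comma, including commas inside quoted fields, so this only works for simple CSV without embedded delimiters.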

Pro Tip: When sorting by multiple columns, you can specify multiple -k options. For example, sort -k1,1 -k2n sorts by the first field alphabetically, then by the second field numerically for lines where the first field is identical. This is incredibly useful for sorting log files by timestamp and then severity.
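Here is that multi-key pattern on a tiny made-up dataset. I've written the second key as -k2,2n so it is limited to field 2. A bare -k2 extends from field 2 to the end of the line, which is harmless here but can surprise you on wider files.

```shell
# Hypothetical data: service name, then a count.
records='web 30
db 5
web 4
db 12'

# Primary key: field 1 as text. Secondary key: field 2 as a number.
sorted=$(printf '%s\n' "$records" | LC_ALL=C sort -k1,1 -k2,2n)

printf '%s\n' "$sorted"
# db 5
# db 12
# web 4
# web 30
```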

Advanced Sort Techniques That Save Time

Once you’ve mastered the basics, these advanced patterns will make your life easier.

Month-Based Sorting

The -M option sorts by month name, which is perfect for log files with month abbreviations:

sort -M -k2 logfile.txt

This recognizes month names like Jan, Feb, Mar and sorts them chronologically rather than alphabetically.
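A quick sketch of month sorting combined with a day-of-month key. The log lines are invented, and the month is in the first field here rather than the second. LC_ALL=C is set so the English abbreviations are the ones recognized.

```shell
# Hypothetical log excerpts: month, day, message.
events='Mar 03 restart
Jan 15 deploy
Mar 01 backup
Feb 28 patch'

# Field 1 is compared as a month name (M), field 2 as a number (n).
by_date=$(printf '%s\n' "$events" | LC_ALL=C sort -k1,1M -k2,2n)

printf '%s\n' "$by_date"
# Jan 15 deploy
# Feb 28 patch
# Mar 01 backup
# Mar 03 restart
```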

Checking If a File Is Already Sorted

Use -c to check if a file is already sorted without actually sorting it:

sort -c sorted_file.txt

If the file isn’t sorted, sort will tell you where the first out-of-order line is and exit with a non-zero status. This is useful in scripts where you need to verify data integrity.
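In a script, you usually care about the exit status rather than the message. A minimal sketch, where check_sorted is a helper name I made up for the example:

```shell
# Two throwaway files: one in order, one not.
ordered=$(mktemp)
shuffled=$(mktemp)
printf 'a\nb\nc\n' > "$ordered"
printf 'b\na\nc\n' > "$shuffled"

# Hypothetical helper: prints "sorted" or "unsorted" based on sort -c's exit status.
check_sorted() {
    if LC_ALL=C sort -c "$1" 2>/dev/null; then
        echo sorted
    else
        echo unsorted
    fi
}

ordered_status=$(check_sorted "$ordered")
shuffled_status=$(check_sorted "$shuffled")
echo "ordered file:  $ordered_status"
echo "shuffled file: $shuffled_status"

rm -f "$ordered" "$shuffled"
```

The check uses the same ordering rules as a real sort would, so pass the same flags (-n, -k, and so on) that you would use to sort the file.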

Ignoring Case

The -f flag (fold) ignores case differences when sorting:

sort -f mixed_case.txt

Without this, byte-order sorting (as in the C locale) puts every uppercase letter before every lowercase one because of ASCII ordering, which can produce unexpected results.
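A small before-and-after on made-up words shows the effect. Both commands run under LC_ALL=C so the comparison is by byte value:

```shell
# Hypothetical word list with mixed capitalization.
words='apple
Banana
cherry'

# Plain byte order: "B" (66) sorts before "a" (97).
byte_order=$(printf '%s\n' "$words" | LC_ALL=C sort)

# -f folds lowercase to uppercase before comparing.
folded=$(printf '%s\n' "$words" | LC_ALL=C sort -f)

printf 'sort:\n%s\n\n' "$byte_order"   # Banana, apple, cherry
printf 'sort -f:\n%s\n' "$folded"      # apple, Banana, cherry
```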

Combining Sort with Other Commands

Sort really shines when you pipe it with other tools. Here are patterns I use weekly.

Finding the Top 10 Resource-Consuming Processes

Using top command output or ps:

ps aux | sort -k4 -nr | head -10

This sorts by the 4th column (memory usage) in reverse numeric order and shows the top 10 results.

Counting and Sorting Duplicate Entries

This is my go-to pattern for analyzing logs:

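sort access.log | uniq -c | sort -nr

Here’s what happens: first sort groups identical lines together, then uniq -c counts occurrences, and finally sort -nr arranges them by count in descending order. Because uniq compares whole lines, this works best when you extract the field you care about first. To find which IP addresses are hitting your server most frequently, for example, pull out the address column with awk '{print $1}' before sorting.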
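Here is the full pipeline on a tiny, simplified log. The file contents and addresses are invented for illustration, and a real access log has more fields per line:

```shell
# Hypothetical, simplified access log: client address first, then the request.
access_log=$(mktemp)
printf '%s\n' \
    '203.0.113.7 GET /index.html' \
    '198.51.100.2 GET /login' \
    '203.0.113.7 GET /about' \
    '192.0.2.9 GET /index.html' \
    '203.0.113.7 POST /login' \
    '198.51.100.2 GET /index.html' > "$access_log"

# Extract the address, group identical ones, count them, rank by count.
top_ips=$(awk '{print $1}' "$access_log" | sort | uniq -c | sort -k1,1nr)

printf '%s\n' "$top_ips"

rm -f "$access_log"
```

With this sample, 203.0.113.7 comes out on top with 3 requests, followed by 198.51.100.2 with 2 and 192.0.2.9 with 1.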

You can read more about the differences between sort -u and sort | uniq to understand when to use each approach.

Sorting Journalctl Output

When you’re analyzing system logs with journalctl, you might want to sort filtered results:

journalctl -u nginx.service --since today | grep ERROR | sort

This extracts today’s nginx errors and sorts them, making patterns easier to spot.

Common Sort Pitfalls and How to Avoid Them

Let me save you some debugging time by highlighting the mistakes I’ve made (and seen others make) with sort.

Locale Settings Can Ruin Your Day

Sort behavior changes based on your system’s locale settings, specifically LC_COLLATE. In some locales, sort ignores punctuation and whitespace, which can produce baffling results.

If you need consistent, predictable ASCII-based sorting, set the locale explicitly:

LC_ALL=C sort file.txt

This uses the C locale, which sorts strictly by byte value. I learned this the hard way when a script that worked perfectly on my Ubuntu laptop broke mysteriously on a CentOS server. Locale differences were the culprit.
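You can check what your own system does with a few mixed-case lines. In this sketch only the C-locale result is predictable. The second command uses whatever locale your shell happens to have, so its output may or may not differ.

```shell
# Hypothetical input with mixed case.
words='b
A
a
B'

# Byte-value ordering: all uppercase letters come before all lowercase ones.
c_sorted=$(printf '%s\n' "$words" | LC_ALL=C sort)

# Ordering under the current environment's locale (varies between systems).
env_sorted=$(printf '%s\n' "$words" | sort)

printf 'LC_ALL=C sort:\n%s\n\n' "$c_sorted"   # A, B, a, b
printf 'sort with locale %s:\n%s\n' "${LC_ALL:-${LC_COLLATE:-${LANG:-unset}}}" "$env_sorted"
```

In scripts that must behave the same everywhere, prefixing each sort call with LC_ALL=C (rather than exporting it globally) keeps the change from leaking into other commands.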

Don’t Redirect Output to the Input File

Never do this:

sort file.txt > file.txt # DON'T DO THIS!

The shell truncates the output file before sort even starts reading, so you’ll end up with an empty file. Always use a different output file or use sort’s -o option, which handles this safely:

sort -o file.txt file.txt # This is safe

Remember: Sort Doesn’t Modify the Original File

This is usually a feature, not a bug, but it catches people off guard. Sort prints to standard output by default. Your original file remains unchanged unless you explicitly redirect or use -o.
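A throwaway file makes both behaviors easy to verify for yourself. The file contents and variable names below are just for the demonstration:

```shell
# Create a scratch file with unsorted lines.
scratch=$(mktemp)
printf 'pear\napple\nfig\n' > "$scratch"

# A plain sort writes to standard output; the file itself is not touched.
LC_ALL=C sort "$scratch" > /dev/null
after_plain_sort=$(cat "$scratch")

# -o with the same name as the input rewrites the file with the sorted result.
LC_ALL=C sort -o "$scratch" "$scratch"
after_in_place=$(cat "$scratch")

printf 'after plain sort:\n%s\n\n' "$after_plain_sort"   # pear, apple, fig
printf 'after sort -o:\n%s\n' "$after_in_place"          # apple, fig, pear

rm -f "$scratch"
```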

Performance Considerations for Large Files

When you’re sorting massive log files (think gigabytes), sort actually handles it pretty gracefully. It uses temporary files and external merge algorithms when necessary.

For huge files, you can help sort out by setting a larger buffer size:

sort -S 2G huge_file.txt

This allocates 2GB of memory for sorting, which can significantly speed up operations on large datasets. Just make sure you actually have that memory available.
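The temporary files mentioned above go to $TMPDIR or /tmp by default, and -T lets you point them at a disk with more room. A scaled-down sketch of the two options together follows. The input is far too small to actually spill to disk, so it only demonstrates the syntax, and the scratch directory is one I create for the example.

```shell
# Hypothetical scratch directory for sort's temporary files.
scratch_dir=$(mktemp -d)

# -S caps the in-memory buffer; -T chooses where spill files would be written.
sorted_numbers=$(seq 1000 -1 1 | sort -n -S 16M -T "$scratch_dir")

first=$(printf '%s\n' "$sorted_numbers" | head -n 1)
last=$(printf '%s\n' "$sorted_numbers" | tail -n 1)
echo "first: $first, last: $last"   # first: 1, last: 1000

rm -rf "$scratch_dir"
```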

Practical Real-World Examples

Let me wrap up with a few complete examples from actual work scenarios.

Finding Duplicate IP Addresses in Apache Logs

awk '{print $1}' /var/log/apache2/access.log | sort | uniq -d

This extracts IP addresses (first field) using awk, sorts them, and shows only duplicates with uniq -d.

Sorting Configuration Files for Version Control

sort -o /etc/hosts /etc/hosts

Keeping config files consistently sorted makes diffs cleaner and merge conflicts less likely when managing infrastructure as code.

Identifying the Largest Directories

du -sh /home/* | sort -h -r | head -5

Shows the five largest directories under /home, sorted by human-readable size in descending order. Essential for troubleshooting disk space issues.

Quick Reference: Essential Sort Options

Here’s a cheat sheet of the flags I use most often:

| Option | Purpose |
|---|---|
| -n | Numeric sort |
| -r | Reverse order |
| -u | Remove duplicates |
| -k N | Sort by field N |
| -t DELIM | Use DELIM as field separator |
| -h | Human-readable numeric sort (1K, 2M, 3G) |
| -f | Ignore case |
| -M | Sort by month name |
| -o FILE | Write output to FILE |
| -c | Check if already sorted |

For complete documentation, check the official sort manual page.

Final Thoughts

The sort command is one of those tools that seems trivial until you really need it. Once you understand numeric sorting, field-based sorting, and how to chain it effectively with pipes, you’ll find yourself reaching for it constantly.

Start with the basic examples I showed you, then gradually layer in the more advanced techniques as you encounter problems that need them. The beauty of Unix tools like sort is that they do one thing well and combine elegantly with sed, grep, and other text processing commands.

And remember: always use -n when sorting numbers. You’ll thank me later.

If you’re building your Linux command-line skills, you might also want to explore finding files with the find command or learn more about automating tasks with cron jobs. These tools work together to make you significantly more efficient at the command line.